CIPP Model of Program Evaluation (PPT)

According to McKillip (1987), “Needs are value judgments that a target group has problems that can be solved” (p. …). Needs analysis, which involves identifying and evaluating needs, is a tool for decision making in the human services and education. The decisions it informs vary and include topics such as resource allocation, grant funding, and planning.

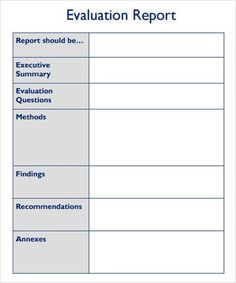

• Explanation of the CIPP program evaluation model
• What gets measured gets done.
• Increases understanding about the program
• Guides program evaluation design and …

Needs analysis is a process of evaluating the problems and solutions identified for a target population, and it emphasizes the importance and relevance of those problems and solutions. The marketing model defines needs analysis as a feedback process that organizations use to learn about, and adapt to, the needs of their client populations. A marketing strategy of needs analysis has three components:

• Selection of the target population: those actually or potentially eligible for the service and able to make the necessary exchanges.
• Choice of competitive position: distinguishing the agency's services from those offered by other agencies and providers.
• Development of an effective marketing mix: selecting a range and quality of services that will maximize utilization by the target population.

The decision-making model, by contrast, is an adaptation of Multi-Attribute Utility Analysis (MAUA) to problems of modeling and synthesis in applied research.

Daniel Stufflebeam's CIPP Evaluation Model

The decision-making model has three stages:

• Problem modeling: the need is identified, and the decision problem is conceptualized in terms of options and decision attributes.
• Quantification: the measurements gathered during need identification are transformed to reflect the decision makers' values and interests.
• Synthesis: an index is produced that orders the options by need.
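The three stages can be sketched in code. This is a minimal sketch and not McKillip's own procedure. It assumes the simplest form of multi-attribute utility analysis. Each attribute gets a linear value function (quantification), and the results are combined by a weighted sum (synthesis). The option names, the attributes (`waiting_list`, `severity`), the value ranges, and the weights are all hypothetical. In practice they would come from the decision makers during problem modeling.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Option:
    """A candidate need (option) with raw measurements on each decision attribute."""
    name: str
    raw: dict[str, float]


def utility(value: float, least: float, most: float) -> float:
    """Quantification: map a raw measurement onto 0..1, where 0 is the value that
    signals the least need and 1 the value that signals the most need.
    Assumes a linear value function, clipped to the 0..1 range."""
    if most == least:
        return 0.0
    u = (value - least) / (most - least)
    return min(max(u, 0.0), 1.0)


def need_index(
    options: list[Option],
    ranges: dict[str, tuple[float, float]],
    weights: dict[str, float],
) -> list[tuple[str, float]]:
    """Synthesis: weighted sum of attribute utilities, ordered from most to least need."""
    total = sum(weights.values())
    scored = []
    for opt in options:
        score = sum(
            (weights[attr] / total) * utility(opt.raw[attr], *ranges[attr])
            for attr in weights
        )
        scored.append((opt.name, round(score, 3)))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical example: three candidate services compared on two attributes.
options = [
    Option("after-school tutoring", {"waiting_list": 40, "severity": 3}),
    Option("parent workshops", {"waiting_list": 10, "severity": 4}),
    Option("summer reading", {"waiting_list": 25, "severity": 2}),
]
ranges = {"waiting_list": (0, 50), "severity": (1, 5)}  # (least need, most need)
weights = {"waiting_list": 0.6, "severity": 0.4}

for name, score in need_index(options, ranges, weights):
    print(f"{name}: {score}")
```

The weighted sum is only one possible synthesis rule. It is used here because each option's score can be traced back to its attribute utilities and weights, which keeps the index open to discussion with decision makers.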

The synthesis index also indicates the relative standing of the needs it orders.

Bradshaw identified four types of outcome expectations that support judgments of need (McKillip, 1987):

• Normative need: expectations based on expert identification of adequate levels of performance or service. (Expectations of this type may miss the real needs of the target population.)
• Felt need: expectations that members of a group have for their own outcomes (e.g., parents' expectations about the appropriate amount of elementary-level mathematics instruction).
• Expressed need: expectations based on the behavior of a target population, as indicated by use of services (e.g., waiting lists, enrollment pressure, or high bed occupancy rates).
• Comparative need: expectations based on the performance of a group other than the target population. (Comparative expectations depend mainly on how similar the comparison group is to the target population, and they can neglect unique characteristics of the target population that invalidate generalizations.)

In planning a needs analysis or assessment (or any other research), it is important to use multiple measures (e.g., different types of measures of the same construct) and different methods of assessment (e.g., client surveys such as questionnaires, and key informant interviews; McKillip, 1987). Given these characteristics of needs analysis, the major concepts of participatory action research (PAR) can also be applied to it, since PAR involves consumers in the planning and conduct of research.

History of Program Evaluation

• Its primary purpose has traditionally been to provide decision makers with information about the effectiveness of some program, product, or procedure.
• It has been viewed as a process in which data are obtained, analyzed, and synthesized into information relevant to decision making.
• It developed in response to the pressing demands, beginning in the 1930s, of a rapidly changing social system.
• Evaluators' attempts to meet these demands resulted in the development of decision-oriented models.

Program evaluation is a particularly important goal of research in natural settings. “Program evaluation is done to provide feedback to administrators of human service organizations to help them decide what services to provide to whom and how to provide them most effectively and efficiently” (Shaughnessy & Zechmeister, 1990, p. …). Program evaluation is a hybrid discipline that draws on political science, sociology, economics, education, and psychology. As a result, professionals from several of these fields (e.g., psychologists, educators, political scientists, and sociologists) are often involved in the process (Shaughnessy & Zechmeister, 1990).

“Evaluation research refers to the use of scientific research methods to plan intervention programs, to monitor the implementation of new programs and the operation of existing ones, and to determine how effectively programs or clinical practices achieve their goals.” “Evaluation research is a means of supplying valid and reliable evidence regarding the operation of social programs or clinical practices: how they are planned, how well they operate, and how effectively they achieve their goals” (Monette, Sullivan, & DeJong, 1990, p. …).

Naturalistic Inquiry

• Defined as slice-of-life episodes, documented through natural language, that represent as closely as possible how people feel, what they know, how they know it, and what their concerns, beliefs, perceptions, and understandings are.
• Consists of a series of observations directed alternately at discovery and verification.
• Came about as an outgrowth of ecological psychology.
• Has been used for many purposes and applied in different orientations, including education and psychology.
• Its perspective and philosophy make it ideally suited to the systematic observation and recording of normative values.

Participatory Action Research (PAR)

In PAR, some of the people in the organization under study participate actively with the professional researcher throughout the research process. Their participation runs from the initial design to the final presentation of results and the discussion of their action implications.

In rehabilitation and education, this paradigm would potentially involve all of the stakeholders: consumers, parents, teachers, counselors, community organizations, and employers. Note that not all PAR evaluation is qualitative.

A design is a plan that dictates when, and from whom, measurements will be gathered during the course of a program evaluation. There are two types of evaluators:

• Summative evaluator: responsible for a summary statement about the effectiveness of the program.
• Formative evaluator: a helper and advisor to the program planners and developers.

The critical characteristic of any evaluation study is that it provide the best information that could have been collected under the circumstances. That information must also meet the credibility requirements of its evaluation audience.
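A design, as a plan for when and from whom measurements are gathered, maps naturally onto a small table of measurement occasions and groups. The sketch below is illustrative only. The occasions (`pretest`, `posttest`, `follow-up`), the groups (`program`, `comparison`), and the group sizes are hypothetical, and the design shown is just one possible plan.

```python
from collections import defaultdict

# Hypothetical design: maps each measurement occasion (when) to the groups
# measured at that occasion (from whom). Names are illustrative only.
Design = dict[str, list[str]]

design: Design = {
    "pretest": ["program", "comparison"],
    "posttest": ["program", "comparison"],
    "follow-up": ["program"],  # e.g. only program participants are re-contacted
}


def occasions_by_group(design: Design) -> dict[str, list[str]]:
    """Invert the plan: for each group, list the occasions at which it is measured."""
    schedule: dict[str, list[str]] = defaultdict(list)
    for occasion, groups in design.items():
        for group in groups:
            schedule[group].append(occasion)
    return dict(schedule)


def total_measurements(design: Design, group_sizes: dict[str, int]) -> int:
    """Count the individual measurements the plan requires, given group sizes."""
    return sum(group_sizes[g] for groups in design.values() for g in groups)


print(occasions_by_group(design))
print(total_measurements(design, {"program": 30, "comparison": 30}))  # → 150
```

Writing the plan down this way makes it easy to check that every group is measured when the evaluation questions require it. It also helps estimate the data-collection burden before the program starts.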

Some general rules for designing good survey instruments:

• The strength of survey research is asking people about their firsthand experiences: what they have done, their current situations, and their feelings and perceptions.
• Questions should be asked one at a time.
• A survey question should be worded so that all respondents are answering the same question.
• If a survey is interviewer-administered, the wording of the questions must constitute a complete and adequate script. When the interviewer reads a question as worded, the respondent should be fully prepared to answer it.
• All respondents should understand what kind of answer constitutes an adequate answer to a question.
• Survey instruments should be designed so that reading questions, following instructions, and recording answers are as easy as possible for interviewers and respondents.
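The rule that questions should be asked one at a time can be partly screened automatically. The sketch below is an assumed rough heuristic, not a validated instrument-design rule. It flags items that contain more than one question mark, or the words "and"/"or", which often (but not always) signal a double-barreled question. The function name `flag_item` and the sample items are hypothetical.

```python
import re

# Assumed heuristic: "and"/"or" as whole words often join two questions into one.
CONJUNCTIONS = re.compile(r"\b(and|or)\b", re.IGNORECASE)


def flag_item(question: str) -> list[str]:
    """Return human-readable warnings for a single survey item (empty list if none)."""
    warnings = []
    if question.count("?") > 1:
        warnings.append("contains more than one question mark")
    if CONJUNCTIONS.search(question):
        warnings.append("contains 'and'/'or'; check it is not double-barreled")
    return warnings


# Hypothetical draft items.
items = [
    "How many days did you attend the program last month?",
    "Were the sessions useful and well organized?",
    "Did you finish the course? Would you recommend it?",
]
for item in items:
    print(item, flag_item(item))
```

A flag is a prompt for review, not a verdict. Phrases such as "one or more" would be flagged even though they ask a single question, so flagged items still need human judgment.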